Vertex AI is a comprehensive platform that developers and data practitioners, such as data scientists and data analysts, use to accelerate the deployment of machine learning models. It is a fully managed platform on which you can both train and deploy ML models and AI applications.

In this article, you will learn how Vertex AI, available in Google Cloud, allows you to handle the entire machine learning workflow, from data management to forecasting.

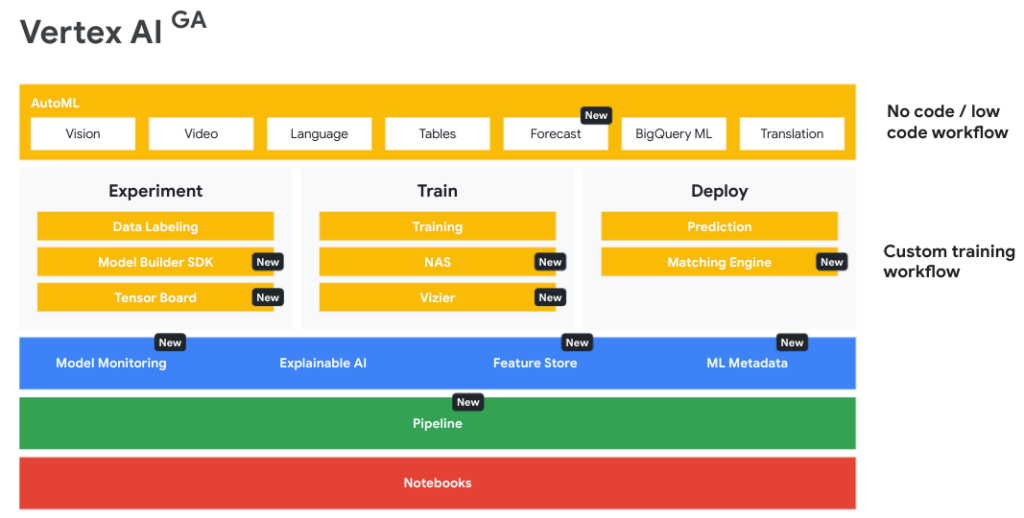

Vertex AI overview

Even if you’re not a seasoned programmer, and regardless of your experience with machine learning and artificial intelligence, Vertex AI can help you improve applications and user experience by utilising previously collected data. Google experts claim that by creating Vertex AI, they have equipped their users with a versatile platform suitable for both beginners and advanced AI practitioners.

One can also define this platform as a broader workspace, a common set of tools that combine areas such as data engineering, data science, and ML engineering, allowing for more efficient model building and deployment.

Benefits of using Vertex AI

The three main benefits of Vertex AI are:

- Building models with Generative AI

- Reduced training time for faster deployment

- Simpler model management.

Vertex AI lets you build with generative AI by giving you easy access to pre-trained models in the Model Garden through a user-friendly API.

For data analysts, Vertex AI is an effective tool that speeds up the process of training, fine-tuning, and deploying ML models. Shorter training time also means lower costs. This is possible thanks to an optimised artificial intelligence infrastructure.

Without Vertex AI, specifically without tools like Vertex AI Pipelines or Vertex AI Feature Store, managing models would be a complex and time-consuming task. The mentioned MLOps tools streamline the deployment of machine learning pipelines.

What does the ML workflow look like?

A typical machine learning workflow consists of three phases: data preparation, model training, and evaluation and deployment.

Phase one: Data preparation

The first phase, known as the preparation phase, includes:

- Data acquisition

- Data analysis

- Data transformation.

Managed Datasets support proper data preparation. They let you manage data in a central location and create labels and annotation sets. They also make it possible to compare the performance of models created with AutoML against custom ones, provide data statistics and visualisations, and automatically split data into training, validation, and test sets.
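The automatic split into training, validation, and test sets can be pictured with a short sketch. The 80/10/10 fractions, the fixed seed, and the function name `split_dataset` are assumptions made for illustration; this is not Vertex AI's own implementation.

```python
import random


def split_dataset(rows, train_frac=0.8, validation_frac=0.1, seed=42):
    """Shuffle rows and split them into training, validation, and test sets.

    The fractions are illustrative defaults; whatever remains after the
    training and validation slices becomes the test set.
    """
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_validation = int(len(shuffled) * validation_frac)
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_validation]
    test = shuffled[n_train + n_validation:]
    return train, validation, test


train, validation, test = split_dataset(range(100))
print(len(train), len(validation), len(test))  # 80 10 10
```

Every row lands in exactly one of the three sets, so the model is never evaluated on data it was trained on.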

Vertex AI supports various types of datasets:

- image

- tabular

- text

- and video datasets.

All of the above, except for tabular datasets, are passed to the application in the JSON Lines format. In the case of Tabular Datasets, Vertex provides data to the training application in CSV format or a Uniform Resource Identifier (URI) to a BigQuery table.
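To make the difference between the two formats concrete, here is a minimal sketch that serialises the same kind of information as JSON Lines and as CSV. The field names (`image_uri`, `label`, `age`, `plan`, `churned`) and the bucket path are hypothetical and do not reproduce the exact schema Vertex AI expects.

```python
import csv
import io
import json


def to_json_lines(records):
    """Serialise each record as one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(record) for record in records)


def to_csv(rows, fieldnames):
    """Serialise tabular rows as CSV with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical image records: a Cloud Storage URI plus a label.
image_records = [
    {"image_uri": "gs://example-bucket/cat.jpg", "label": "cat"},
    {"image_uri": "gs://example-bucket/dog.jpg", "label": "dog"},
]

# Hypothetical tabular rows.
tabular_rows = [
    {"age": 34, "plan": "basic", "churned": "no"},
    {"age": 51, "plan": "premium", "churned": "yes"},
]

print(to_json_lines(image_records))
print(to_csv(tabular_rows, ["age", "plan", "churned"]))
```

JSON Lines suits nested or unstructured items such as images with annotations, while CSV fits flat rows with a fixed set of columns.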

Phase two: Training

The second phase of a typical machine learning workflow consists of:

- model design/selection,

- model training.

At this stage you need to choose how to train your model. Choose AutoML if time is your priority, for example when you need to prototype models quickly or verify new datasets.

However, sometimes a more hands-on approach to model training is advisable. For instance, if you want your AI model to make the best possible recommendations to your online customers, it makes sense to train it on a specifically curated set of data.

Oftentimes you can achieve better results through custom training as an alternative to AutoML. This way, you can optimise the training application for the desired outcome and have control over the algorithm throughout the training process, including the option to develop your own functions.

Yet another approach is to use BigQuery ML. In this case, using SQL commands, you can quickly create a model directly in BigQuery, where your data is stored, and later use it for batch predictions.
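As a rough illustration of the BigQuery ML route, the sketch below builds the two SQL statements involved: one that trains a model and one that scores a table in batch. The helper names and the `shop.*` table and model names are hypothetical, and the statements are only assembled as strings here; you would run them in BigQuery yourself.

```python
def create_model_sql(model, source_table, label_column, model_type="logistic_reg"):
    """Build a BigQuery ML CREATE MODEL statement that trains on source_table."""
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = '{model_type}', "
        f"input_label_cols = ['{label_column}']) AS\n"
        f"SELECT * FROM `{source_table}`"
    )


def batch_predict_sql(model, input_table):
    """Build an ML.PREDICT query that scores every row of input_table."""
    return f"SELECT * FROM ML.PREDICT(MODEL `{model}`, TABLE `{input_table}`)"


# Hypothetical dataset, model, and table names.
print(create_model_sql("shop.churn_model", "shop.customers", "churned"))
print(batch_predict_sql("shop.churn_model", "shop.new_customers"))
```

Because both steps are plain SQL, the data never has to leave BigQuery for training or for batch scoring.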

Which training method should you choose? That depends on your skills and experience, as well as the parameters your model is required to meet. The table below will help you choose the right option.

| | AutoML | Custom training | BigQuery ML |
| --- | --- | --- | --- |
| Do I need data analytics experience? | No | Yes | No |
| Do I need programming skills? | No | Yes | Yes, you need SQL programming skills. |
| How much time does model training take? | Little | More, because of extended model prep | Little |
| Are there limits in defining machine learning goals? | Yes, you need to choose one of predefined AutoML goals. | No | Yes |
| Can I optimise the model manually? | No | Yes | No |
| Can I control the training environment? | Yes, to a limited extent. | Yes | No |
| Are there any data size limits? | Yes | No, except for Managed Datasets. | Yes |

Phase three: Evaluation and deployment

The third stage involves three parts:

- Evaluation

- Deployment

- Prediction.

To assist us in the evaluation process, Google provides Explainable AI. It is a set of tools and frameworks that help us better understand and interpret the predictions generated by machine learning models.

If you are satisfied with the performance of the newly trained AI model, the next step is deployment. This can be done either through the Google Cloud Console or via the API. This is also the point where scaling begins.

It could seem that our work with the AI model is done. However, the ultimate goal is to obtain predictions, which can be categorised into:

- Online predictions

- Batch predictions.

For online predictions, requests are sent synchronously to the model endpoint. They are useful in situations where quick inferences are required or when responding to input data from an application.

On the other hand, batch predictions are asynchronous requests that allow us to receive predictions directly from the model without deploying it to an endpoint. They are used in situations where immediate responses are not necessary.
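A synchronous online prediction boils down to one HTTP request to the model endpoint. The sketch below only assembles the URL and JSON body of such a request, following the shape of the Vertex AI REST `predict` method as far as we understand it. The project, region, endpoint ID, and instance fields are placeholders, and authentication is left out entirely.

```python
import json


def build_online_predict_request(project, location, endpoint_id, instances):
    """Return the URL and JSON body for a synchronous prediction call."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{location}/"
        f"endpoints/{endpoint_id}:predict"
    )
    body = json.dumps({"instances": instances})
    return url, body


# Placeholder identifiers; the instance format depends on the deployed model.
url, body = build_online_predict_request(
    project="my-project",
    location="europe-west4",
    endpoint_id="1234567890",
    instances=[{"age": 34, "plan": "basic"}],
)
print(url)
print(body)
```

The caller waits for the response to this request, which is what makes online predictions suitable for interactive applications. A batch prediction, by contrast, is submitted as a job and its results are collected later.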

Who uses Vertex AI?

Vertex AI, as a leading gateway to the capabilities of artificial intelligence and machine learning, attracts interest from almost every significant industry, from retail and finance to the broader manufacturing sector, including the automotive industry.

Wayfair, an online retailer of furniture and home accessories, has increased the speed of model training by up to ten times by using Vertex AI. The popular platform Etsy, which connects people creating, buying, and selling unique products, has reduced the time from idea to ML experiment by half.

Companies in the retail sector, such as Lowe’s and the Brazilian company Magalu, are able to better determine their inventory needs by leveraging the features available in Vertex AI.

For Seagate, a manufacturer of HDDs, using models created in Vertex AI allows for better prediction of disk failures, with AutoML models achieving up to 98% effectiveness. The Japanese branch of Coca-Cola (Coca-Cola Bottlers Japan) tracks data from 700,000 vending machines using Vertex AI and BigQuery, enabling it to predict the most profitable product placements.

Staying in Japan for a moment, Subaru, a Japanese automobile manufacturer, utilises the capabilities of Vertex AI to reduce the number of fatal accidents involving its vehicles.

Vertex AI Functions: MLOps in One Place

Describing what Vertex AI is and how it works, we have already mentioned some of its key functions, such as Model Garden and Explainable AI. It’s time to gather them in one place and briefly describe them.

Model Garden

Available within Vertex AI, it is a repository of models created by both Google and partner companies.

Generative AI Studio

This managed environment lets you interact with, fine-tune, and deploy models to production.

AutoML

A tool that enables automatic training of Google models, allowing you to obtain results faster.

Deep Learning VM Images

This tool allows you to create virtual machine instances on Compute Engine from images with the most popular AI frameworks preinstalled, so you do not have to worry about software compatibility.

Workbench

It is a fully managed and scalable computational infrastructure based on the Jupyter platform. It provides a secure, isolated environment where data analysts can perform all machine learning operations, from experiments to deployments.

Matching Engine

This highly scalable service allows for vector similarity matching while ensuring low costs and low latency.
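Vector similarity matching means finding the stored vectors closest to a query vector. The sketch below does this exactly, by brute force with cosine similarity, on three hypothetical three-dimensional embeddings. A managed service would rely on approximate search over far larger indexes, so treat this only as an illustration of the underlying idea.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest_neighbours(query, index, k=2):
    """Return the ids of the k vectors most similar to the query."""
    scored = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [item_id for item_id, _ in scored[:k]]


# Hypothetical product embeddings.
index = {
    "sofa": [0.9, 0.1, 0.0],
    "armchair": [0.8, 0.2, 0.1],
    "lamp": [0.0, 0.1, 0.9],
}
print(nearest_neighbours([1.0, 0.0, 0.0], index))  # ['sofa', 'armchair']
```

Brute force compares the query with every stored vector, which is why large catalogues need a dedicated, scalable service.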

Data Labelling

Proper data labelling is essential for machine learning. Vertex AI Data Labeling significantly streamlines this process.

Deep Learning Containers

Containerisation extends to AI as well. With Vertex AI Deep Learning Containers, you can quickly create and deploy models in a portable and consistent environment.

Explainable AI

As mentioned earlier, Explainable AI enables better understanding and trust in the predictions generated by the model through integration with other functionalities such as Vertex AI Prediction, AutoML Tables, and Vertex AI Workbench.

Feature Store

It is a fully managed repository for sharing and reusing ML features.

Vertex ML Metadata

This feature allows for artefact tracking and lineage tracking in the machine learning workflow.

Model Monitoring

With Vertex AI Model Monitoring, it is possible to monitor the model through alerts that warn of incidents related to model performance, including data drift and concept drift.
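To give a feel for what a data drift alert measures, the sketch below compares the distribution of one categorical feature at training time and at serving time, and raises a flag when the largest difference in category share exceeds a threshold. The distance measure, the 0.3 threshold, and the sample data are assumptions chosen for illustration, not a description of how Vertex AI Model Monitoring is configured.

```python
from collections import Counter


def category_distribution(values):
    """Map each category to its share of the values."""
    counts = Counter(values)
    total = len(values)
    return {category: count / total for category, count in counts.items()}


def l_infinity_distance(baseline, current):
    """Largest absolute difference in share across all categories."""
    base = category_distribution(baseline)
    curr = category_distribution(current)
    categories = set(base) | set(curr)
    return max(abs(base.get(c, 0.0) - curr.get(c, 0.0)) for c in categories)


def drift_alert(baseline, current, threshold=0.3):
    """True when the distributions differ by more than the threshold."""
    return l_infinity_distance(baseline, current) > threshold


# Hypothetical feature values seen in training and in production.
training_plans = ["basic"] * 70 + ["premium"] * 30
serving_plans = ["basic"] * 30 + ["premium"] * 70

print(l_infinity_distance(training_plans, serving_plans))
print(drift_alert(training_plans, serving_plans))
```

Here the share of each plan moves by roughly 0.4 between training and serving, which is above the assumed threshold and would trigger an alert.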

Neural Architecture Search

This functionality, based on Google’s research in AI, allows for creating new model architectures and optimising them for latency, power, and memory.

Pipelines

This function enables the creation of pipelines using TensorFlow Extended and Kubeflow Pipelines.

Prediction

Using a unified deployment platform, models trained in TensorFlow, scikit-learn, XGBoost, BigQuery ML, and AutoML can be easily deployed for online predictions over HTTP or used for batch predictions.

TensorBoard

You can use this tool to visualise and track the progress of machine learning experiments. Vertex AI TensorBoard can display text, image, and audio data.

Training

If you need greater flexibility, this service provides a set of pre-built algorithms and also lets you bring your own code for training models.

Vizier

This service optimises hyperparameters to maximise prediction accuracy.
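Hyperparameter optimisation can be sketched as a loop that proposes parameter values, scores them, and keeps the best result. The example below uses plain random search, a deliberately simpler technique than the one a service like Vizier applies, and a made-up objective function (`toy_accuracy`) standing in for "train a model and measure its accuracy".

```python
import random


def random_search(objective, search_space, trials=50, seed=0):
    """Sample parameters uniformly at random and keep the best-scoring set."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(trials):
        params = {
            name: rng.uniform(low, high)
            for name, (low, high) in search_space.items()
        }
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_accuracy(params):
    """Hypothetical objective that peaks at learning_rate=0.1, dropout=0.3."""
    return (
        1.0
        - (params["learning_rate"] - 0.1) ** 2
        - (params["dropout"] - 0.3) ** 2
    )


search_space = {"learning_rate": (0.0, 1.0), "dropout": (0.0, 0.5)}
best_params, best_score = random_search(toy_accuracy, search_space)
print(best_params, best_score)
```

A dedicated optimisation service aims to reach good values in fewer trials than random sampling, which matters when every trial means training a full model.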

Getting Started with Vertex AI

Now that you know what Vertex AI is, who uses it, and what its core functionalities are, it’s time to take your first steps. If you haven’t used Google Cloud services before, remember that Google offers $300 in credits to start using their cloud platform.

The pricing for services available within Vertex AI can be complex, so it may be helpful to seek assistance from an official Google Cloud partner. By reaching out to experts from FOTC, you will not only gain knowledge about the costs of individual services but also receive substantive support on how to optimally utilise them to achieve desired outcomes.