The generative AI arms race is afoot. In an effort to beat the competition to the market, tech giants are funnelling billions into AI research hoping to come out victorious from the generative AI revolution.

Generative artificial intelligence is already capable of creating content: textual, visual, audio and video. Even if video generation still requires refinement, the articles, posts and summaries that AI algorithms produce sound extremely convincing, practically indistinguishable from human-created content.

How does generative AI work?

First off, AI models are not a new thing, even though they may appear to have emerged very recently. We’ve had user-facing AI-driven software for years now. Search engines rely heavily on AI, as do dozens of other services, such as Google Assistant, which analyses human speech in real time and comes up with the most suitable response.

Even Bard, Google’s response to ChatGPT and Microsoft’s Copilot, is the result of years and years of work, as are other generative AI models. Generative AI technologies may appear novel, but the real novelty is in how we approach them.

Even though AI systems built for specific tasks (e.g. beating masters at chess and Jeopardy!) relied mostly on human-supervised training, it wasn’t until we let models learn with far less human supervision, for example by allowing AIs to train one another, that we started achieving truly spectacular results. This is what ushered in the new era of generative AI.

Two of the most influential approaches to building generative neural networks are:

GANs – Generative adversarial networks

In short, a GAN consists of two neural networks, one designed to deceive the other. Imagine a game where a skilled forger creates a replica of a known painting.

That’s the generator’s job: to generate new images that are impossible to distinguish from the real ones. The other network, called the discriminator, is trained to tell whether a given piece of data (e.g. an image) is genuine or a product of the generator’s creativity.

The generator is rewarded every time it manages to deceive the discriminator. But the discriminator is getting better too. Over time, it perfects its ability to spot the smallest discrepancy, the tiniest fault, and to tell which content is genuine and which is the generator’s “noise”.

With a trainer as good as that, the generator has to become a master at image creation: the best forger imaginable. This way the pair of “adversaries” create a generative AI model that can rival human creativity.
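The “game” described above is usually formalised, following Goodfellow et al. (2014), as a minimax objective. In this standard formulation, D(x) is the discriminator’s estimated probability that x is a real sample, G(z) is the sample the generator produces from random noise z, the discriminator tries to maximise V and the generator tries to minimise it. Treat it as the textbook version; practical GANs often use modified losses.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The first term rewards the discriminator for recognising real data; the second rewards it for rejecting forgeries, which is exactly the term the generator works against.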

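By stacking two GANs together (StackGAN), researchers trained neural networks that create realistic images from text descriptions, and the more data these models ingested, the more realistic their output became. Today’s best-known image generators, such as DALL-E, Stable Diffusion and Midjourney, grew out of this line of research, although most of them rely on newer techniques, such as diffusion models, rather than on GANs.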

GPTs (Generative Pre-Trained Transformers)

The similarity to the name ChatGPT is not accidental: the “GPT” in ChatGPT stands for exactly this. The technology led to the creation of an app that broke user-adoption records, crossing the one-million-user mark within five days. That’s right. Five. Days.

Basically, a transformer is a type of neural network that can figure out the context of sequential data. Unlike earlier recurrent neural networks, which read a sentence one word at a time, it processes the whole sequence at once. It doesn’t just rely on simple statistics to guess what word could come next: transformers actually analyse the relationships between words, from the smallest unit up to the wider context.

First presented in a 2017 academic paper (“Attention is All You Need”, Vaswani et al., 2017), the transformer architecture transforms input sentences into output sentences by using a mechanism called “self-attention”.

Self-attention is how we imagine a super-intelligent alien learning one of our languages: by figuring out how words relate to one another in a sentence.
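To make “figuring out how words relate to one another” concrete, here is a minimal pure-Python sketch of single-head scaled dot-product self-attention, the mechanism described by Vaswani et al. The token vectors and projection matrices are made-up toy values; a real transformer learns these matrices, works with far larger vectors and runs many attention heads in parallel. The names `softmax`, `matmul` and `self_attention` are illustrative helpers, not part of any library.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1 (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is a list of token vectors; w_q, w_k and w_v are projection matrices
    (learned in a real model, hand-picked toy values here).
    Returns the new token vectors and the attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for q_i in q:
        # How strongly does this token relate to every token in the sequence?
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        w = softmax(scores)
        # The new representation is a weighted mix of all the value vectors.
        out = [sum(w_j * v_j[c] for w_j, v_j in zip(w, v)) for c in range(len(v[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Three toy 2-dimensional token embeddings and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token “attends” to every token in the sequence, including itself; the third token, which overlaps with both others, spreads its attention accordingly.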

The original transformer was built primarily to create reliable translators from one language to another. It soon became clear that the same set of skills could be used to:

- recognise speech,

- summarise text,

- generate text,

- answer (increasingly complex) questions,

- create code (after all, code is nothing more than a very regular artificial language).

Models of this kind, trained on vast amounts of text, are referred to as Large Language Models (LLMs). We will talk about them in more detail later.

What are some examples of generative AI tools?

As mentioned before, we can distinguish several types of generative AI models that are currently available.

Image generation

DALL-E, Midjourney and Stable Diffusion are the most commonly recognised names right now. They create images based on text descriptions (called prompts). Prompts can include the object(s) we wish to include, as well as the style, colour palette, saturation, and many other directions. Each new release adds new features to the existing capabilities.

AI-generated art has become quite controversial, because many artists’ works have been used as training data for these models without attribution.

Text generation

ChatGPT was the first LLM to reach a mass audience and, as mentioned earlier, took the market by storm, reaching a million users in under a week. Google and Microsoft (as well as other tech giants) are currently trying to carve out a niche for themselves by integrating their generative AI systems (Bard and Copilot) into their search engines. Many more generative AI models are in preparation, and we may soon see a slew of new names pop up.

These models are currently garnering the most attention, as they are already taking over tasks previously done by human workers and may soon become a real game changer in business. Companies all over the world are implementing generative AI models, seeking to gain an edge. According to Goldman Sachs, generative AI may increase global GDP by 7% over a ten-year period.

Let’s take a closer look at how text-generating models are used and what we might expect.

Text Generation with AI

Generative AI models, and LLMs specifically, rely on large, pre-trained neural networks whose learned weights let them generate a text response to a prompt, for example: “Name the most common food allergens.” The response would likely come in the form of a list that includes “peanuts” and “shellfish”, plus a brief description of where they most commonly appear and how dangerous they can be.

LLMs are AI models that are pre-trained on vast datasets and can generate natural-language text that is virtually indistinguishable from human-generated content.
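LLMs build their answer one token (roughly a word or word fragment) at a time, each time choosing a likely continuation of everything written so far. The toy sketch below shows only that generation loop. Its “model” is a hypothetical, hand-written table that looks at the previous word alone, which is a drastic simplification: a real LLM scores every possible next token with a transformer that sees the whole prompt and the text generated so far.

```python
# Hypothetical toy "model": scores for the next word given only the previous word.
NEXT_WORD_SCORES = {
    "<start>": {"common": 2.0, "rare": 0.5},
    "common": {"allergens": 3.0, "foods": 1.0},
    "allergens": {"include": 2.5, "<end>": 0.5},
    "include": {"peanuts": 2.0, "shellfish": 1.5},
    "peanuts": {"<end>": 3.0, "and": 1.0},
}


def generate_greedy(scores, max_words=20):
    """Append the highest-scoring next word until <end> or the length limit."""
    words, previous = [], "<start>"
    for _ in range(max_words):
        # Unknown words fall back to ending the sentence.
        candidates = scores.get(previous, {"<end>": 1.0})
        next_word = max(candidates, key=candidates.get)
        if next_word == "<end>":
            break
        words.append(next_word)
        previous = next_word
    return " ".join(words)


print(generate_greedy(NEXT_WORD_SCORES))
```

Always picking the single best-scoring word (greedy decoding) is the simplest strategy; production models usually sample instead, which is what parameters such as temperature control.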

Opening the Black Box

Arguably the main thing that generative, prompt-driven LLMs have changed is our perception of AI technology. As Microsoft’s CEO Satya Nadella pointed out, the real difference between generative LLMs and the multitude of AI-powered software we have known for years is that we now interact with them and have almost direct access to the AI-driven decision making process. “It is no longer on autopilot,” Nadella said in an interview with Andrew Ross Sorkin on NBC News.

This means that we have finally gained an insight into how AI technology actually works. The millions of decisions made by a model trained on swathes of data over months no longer happen in the background. Because these models interface with humans via natural language, we can see the decisions happen as we speak, or type.

How is generative AI changing creative work?

Because we get to see the “inner workings” of machine learning models while using generative AI, an entirely new skill has emerged: prompt design. It may seem like a joke at first, but being able to “talk” to a generative AI model with ease and efficiency may become an indispensable skill.

And this is also where consumer-facing generative AI models diverge from business-facing applications. As with any type of software, there is a B2C version, which is designed to be as straightforward as possible. This is the reason behind the design of chatbots like ChatGPT: a single dialogue box. Question and answer. Prompt and response.

For more advanced users, simplicity is not as important as getting the best results, even if that requires more training.

Google generative AI models

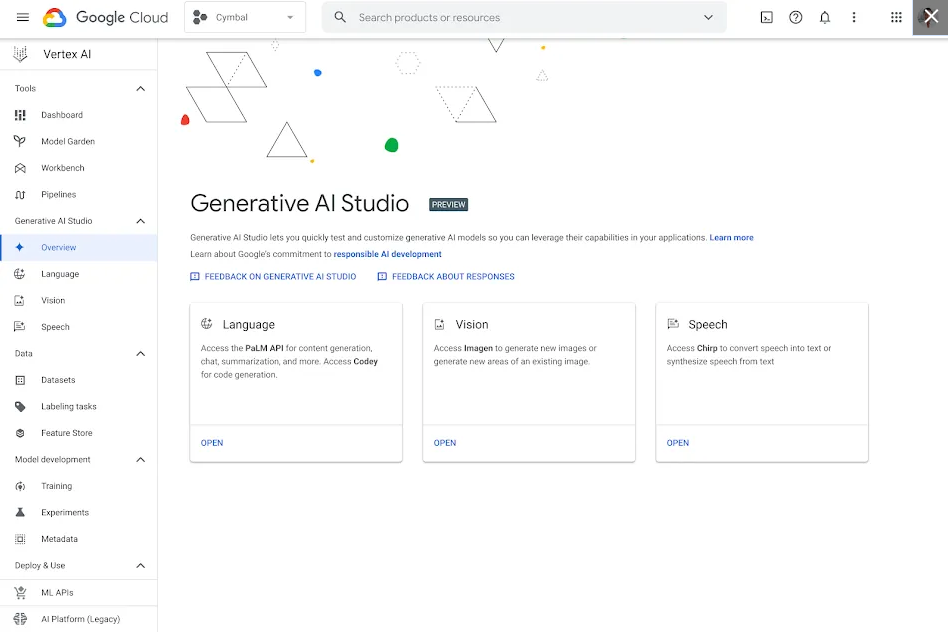

Apart from adding summary-producing generative AI tools to its search engine, Google is unveiling a rapidly expanding suite of generative AI models within the Google Cloud platform. Available in the Vertex AI section of the cloud console, these generative pre-trained transformer models can generate text with a variety of adjustable parameters.

Large language models (LLMs)

Unlike generative models intended for everyday search, summarising, translating and writing copy, such as Bard or ChatGPT, Vertex AI LLMs don’t rely only on a simple prompt-response model, where the user has little say over how the model generates the answer. Instead, they provide the user with a range of fine-tuning tools to get the desired results.

Below, we will focus on the generative LLMs available on Google Cloud.

Prompt Design with Vertex generative AI

The first thing any generative AI user realises is that there is an art to designing prompts that yield the desired result. You can go with a single sentence and rely on the language model to provide a suitable response. Or you can help it get there. Vertex AI LLMs are designed to give the user as much control over the model as possible, without requiring any programming expertise.

Zero-shot prompting

This is the simplest approach: a single prompt with no examples or additional “tweaks”, like the allergen prompt above. You can use it to get a quick response, e.g. to generate a brief summary of a report or to turn technical or scientific jargon into plain language. While this is as far as many LLMs go, it is only the starting point in Google’s Vertex AI LLMs.
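A zero-shot prompt is nothing more than an instruction, optionally followed by the input to work on, with no worked examples. The sketch below only assembles that text; `build_zero_shot_prompt` is a hypothetical helper, and sending the result to Vertex AI or any other model is deliberately left out.

```python
def build_zero_shot_prompt(instruction, text=""):
    """A zero-shot prompt: a task description (plus optional input), no examples."""
    prompt = instruction.strip()
    if text:
        # Separate the instruction from the material it applies to.
        prompt += "\n\n" + text.strip()
    return prompt


print(build_zero_shot_prompt("Name the most common food allergens."))
print(build_zero_shot_prompt(
    "Summarise the following report in two sentences:",
    "<report text goes here>",
))
```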

Few-shot prompting

You can “teach” your model to provide content that suits your purposes better than a general response. To that end, you can use a structured prompt template and specify which words the model needs to include and which it should omit. Or go a step further and tell the model which topics to focus on and which to skip in the response.
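A structured prompt of this kind can be thought of as a fixed template with optional slots for the constraints. The sketch below is one possible layout, assuming plain-text labels such as “Use these words:”; it is not the template Vertex AI uses, and `build_structured_prompt` is a hypothetical name.

```python
def build_structured_prompt(task, include_words=(), exclude_words=(),
                            focus_topics=(), skip_topics=()):
    """Assemble a prompt from a fixed template of optional constraints."""
    lines = [f"Task: {task}"]
    # Only add a constraint line when the user actually supplied values for it.
    if include_words:
        lines.append("Use these words: " + ", ".join(include_words))
    if exclude_words:
        lines.append("Do not use these words: " + ", ".join(exclude_words))
    if focus_topics:
        lines.append("Focus on: " + ", ".join(focus_topics))
    if skip_topics:
        lines.append("Do not cover: " + ", ".join(skip_topics))
    return "\n".join(lines)


prompt = build_structured_prompt(
    "Describe the most common food allergens for a school newsletter.",
    include_words=["peanuts", "shellfish"],
    exclude_words=["anaphylaxis"],
    focus_topics=["where they appear in everyday foods"],
    skip_topics=["treatment"],
)
print(prompt)
```

Keeping the constraints in named slots makes it easy to reuse the same template across many requests and change one constraint at a time.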

Train by example

You can also provide the model with several examples of the type of answer you expect (the “shots” in few-shot prompting). This is very effective in obtaining results as close to your desired ideal as possible.
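Few-shot prompting places a handful of worked input/output pairs ahead of the new input, so the model can infer the expected pattern without any retraining. A minimal sketch, assuming a simple “Input:/Output:” layout (a common convention, not a Vertex AI requirement) and a hypothetical `build_few_shot_prompt` helper:

```python
def build_few_shot_prompt(instruction, examples, query):
    """Instruction, then worked input/output pairs, then the new input."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # Ending on "Output:" invites the model to continue the pattern.
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Rewrite technical phrases in plain language.",
    examples=[
        ("myocardial infarction", "heart attack"),
        ("hypertension", "high blood pressure"),
    ],
    query="cerebrovascular accident",
)
print(prompt)
```

Consistent formatting across the examples matters more than their number: the model picks up the layout as well as the content.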

Experiment with model parameters

You can go even further and adjust the model’s parameters, such as temperature, to get any result between the most likely response and the most surprising, “original” one.
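Vertex AI text models expose sampling parameters such as temperature, top-K and top-P. The sketch below shows the common textbook meaning of two of them on made-up next-token scores; it illustrates the general idea and is not Google’s implementation. Temperature divides the scores before they are turned into probabilities (lower values favour the most likely token, higher values flatten the distribution), and top-K discards all but the K most likely tokens before sampling.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw next-token scores into probabilities.

    temperature < 1 sharpens the distribution (more predictable output);
    temperature > 1 flattens it (more surprising output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_filter(probs, k):
    """Keep only the k most likely tokens and renormalise (top-K sampling)."""
    keep = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    total = sum(probs[i] for i in keep)
    return [probs[i] / total if i in keep else 0.0 for i in range(len(probs))]


tokens = ["peanuts", "shellfish", "sesame", "mustard"]
logits = [2.0, 1.5, 0.5, 0.1]  # made-up scores for illustration

rng = random.Random(0)  # fixed seed so the run is reproducible
for t in (0.2, 1.0, 2.0):
    probs = top_k_filter(softmax_with_temperature(logits, t), k=3)
    choice = rng.choices(tokens, weights=probs, k=1)[0]
    print(t, [round(p, 3) for p in probs], choice)
```

At a temperature of 0.2 almost all the probability lands on “peanuts”; at 2.0 the other candidates get a real chance, which is where the more “original” answers come from.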
