Scalability, high performance, efficient resource consumption and relatively low cost: these are just some of the advantages that containerisation offers. Today we explain what containers are and what your business can gain from them.

Scenario #1: A new application works flawlessly in the local environment, but right after deployment on the server, it becomes riddled with bugs. There can be many reasons for this: different library versions, operating system incompatibilities or server configuration errors.

Scenario #2: Your team wants to quickly fix one of the app’s features. Unfortunately, the update requires stopping the entire application, which is inconvenient for users. And what if the change causes a crash in the production version?

Scenario #3: Your app’s popularity has grown tremendously, and the current infrastructure is no longer as efficient as it used to be. The application suffers availability drops, infrastructure maintenance costs are rising, and the development team struggles to keep up with patching.

There could be many more such scenarios, and in most of them, switching to containers can help bring about a happy ending.


What is containerisation?

Containerisation borrows its core idea from the containers used in the logistics industry. A software container is nothing more than a ‘package’ that usually houses an application or part of it, along with the set of files needed to run the code.

Notably, a containerised application does not depend heavily on the type and configuration of the underlying infrastructure. It can be easily moved, replicated or deployed in various environments (on-premise, in a public cloud, hybrid or multi-cloud).

To return to the transport analogy: a physical container is easy to transfer from a ship to a rail car or truck trailer and deliver safely to its destination.

Contents of a container

In IT, containers support a shift away from the traditional monolithic architecture towards a more agile one. With containerisation, an application can be divided into smaller pieces, so-called “microservices”. This speeds up development: DevOps teams can change a single microservice without updating the whole app or worrying that a bug will shut down the entire system.


However, a microservice is not the same as a container. Microservices are about software design (an architectural paradigm), while containers are about packaging this software for deployment. A container can hold the actual application code or a part of it, together with the required libraries, dependencies, binary files and the runtime environment needed to run them.

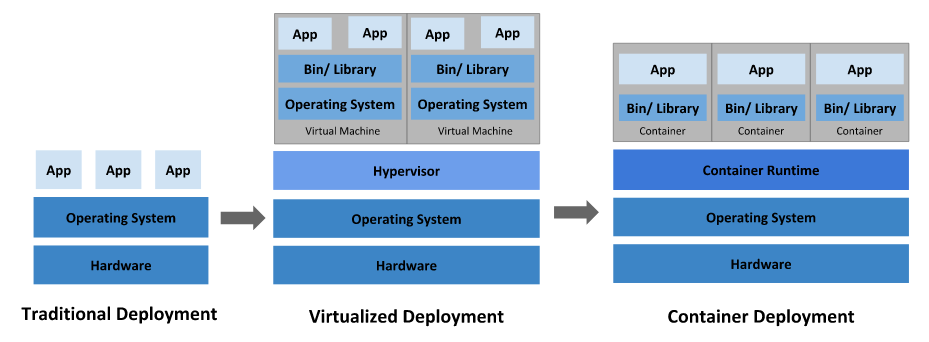

Containerisation vs virtualisation

Containerisation and virtualisation may seem similar at first glance, but they are two completely different technologies.

In a typical hosted virtualisation architecture, everything starts with the lowest level of infrastructure: the physical server. The host OS is installed on the second level. Above it runs a hypervisor, the software that supervises the operation of virtual machines. On top, there can be many isolated VMs. (Bare-metal hypervisors skip the host OS and run directly on the physical server.)

The hypervisor allocates the physical server’s resources (CPU, RAM, disk space) to the virtual machines, each of which runs its own full operating system, the so-called guest OS. Both the guest operating systems and the hypervisor consume the physical hardware’s computing power, leaving fewer resources for applications.

With containerisation, there’s no need for a hypervisor or guest operating systems: all containers on a host share the host OS kernel and are run by a single container engine. Because there is no hypervisor or guest OS to boot, a containerised application starts almost instantly.

Benefits and drawbacks of containerisation

The most significant advantages of containerisation include:

- Portability. A container holds everything an application needs (application code, libraries, runtime environment). It can be moved freely and run wherever a compatible container engine is available, regardless of the infrastructure type or configuration.

- Less resource consumption. A container needs less computing power than a virtual machine because it doesn’t include a guest operating system; it shares the host’s kernel instead. A typical virtual machine image is measured in gigabytes, while a typical container image is measured in megabytes.

- Speed. Creating, running, replicating and deleting a container typically takes seconds. Similar operations on a virtual machine take much longer and consume more resources, which also drives up the cost of the service. With containers, DevOps teams can work more efficiently on new features and updates.

- Scalability. Containers also have the edge in horizontal scaling. Depending on demand, additional instances can be launched within seconds and managed automatically by orchestration software.
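To make automatic, demand-based scaling a little more concrete, here is a minimal Python sketch of a scaling rule. It follows the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), including its default 10% tolerance band. The function name, the use of average CPU utilisation as the metric and the default bounds of 1 to 10 replicas are illustrative assumptions; the real autoscaler also accounts for pods that are not yet ready, missing metrics and stabilisation windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # assumed lower bound (illustrative)
    max_replicas: int = 10,  # assumed upper bound (illustrative)
    tolerance: float = 0.1,  # skip scaling if within 10% of the target
) -> int:
    """Return how many instances to run, scaling in proportion to load.

    current_metric and target_metric could be, for example, average CPU
    utilisation per instance in percent (an illustrative choice).
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")

    ratio = current_metric / target_metric

    # Close enough to the target: keep the current count to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    # Scale proportionally, rounding up so the result errs towards more capacity.
    desired = math.ceil(current_replicas * ratio)

    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))  # load above target, scale out: 6
print(desired_replicas(4, 63, 60))  # within tolerance, unchanged: 4
print(desired_replicas(4, 20, 60))  # load below target, scale in: 2
```

In other words, four instances averaging 90% CPU against a 60% target would grow to six, while 63% falls inside the tolerance band and leaves the count as it is.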

Containerisation also comes with challenges and does not solve every problem. Here are the most significant drawbacks to be aware of:

- Performance and complexity. Containerisation adds a layer of complexity that is absent when applications run directly on the server. While it can improve overall performance and scales horizontally better than virtual machines, it also requires careful analysis of how containers interact with the operating system and the available resources.

- More for the cloud than for local use. Containerisation may be unnecessary when developing an application that will run locally, e.g. in a desktop environment.

- Higher security, but at a higher cost. Containerisation can improve application security, but it requires additional investment and know-how to configure the environment properly. Misconfiguration is a significant risk, and mistakes made at the beginning can have unpleasant consequences, so the first step should be an audit of the company’s IT environment.

Docker, Kubernetes, Rancher – containerisation solutions

To implement containerisation in a company, you need to start with a container platform. Once the number of containers grows, it will be time for an orchestration solution: a tool that groups containers into manageable clusters and automates deployment, scaling and resource allocation.

One of the most popular container platforms is Docker, which has been around since 2013. It’s an open-source solution that, in a nutshell, builds image files of a specific function or application (Docker images) and runs them as containers using an engine (Docker Engine). It was originally designed for Linux, but Docker now supports Windows and macOS as well.

To manage a growing number of Docker containers efficiently, you need an orchestration tool. This is where Kubernetes comes in. Originally designed by Google and released as open source under the Apache 2.0 licence in 2014, it is currently maintained and developed by the independent Cloud Native Computing Foundation (CNCF). Kubernetes automates the deployment and management of containerised applications, including those packaged as Docker images, across clusters in any environment: on-premise, public cloud, hybrid or multi-cloud. On Google Cloud Platform, the managed Kubernetes service is Google Kubernetes Engine, GKE for short.
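Orchestrators such as Kubernetes work declaratively: you describe the desired state (for example, how many instances of each service should run), and controllers repeatedly compare it with the actual state and act on the difference. The toy Python sketch below shows one pass of such a reconciliation loop. The `Cluster` class, its `start`/`stop` methods and the container naming scheme are hypothetical stand-ins, not the API of Kubernetes or any other tool; a real orchestrator talks to a container runtime, schedules containers across many machines and handles failures, none of which is modelled here.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical in-memory stand-in for a cluster.

    Maps each service name to the IDs of its running containers.
    """
    running: dict[str, list[str]] = field(default_factory=dict)
    next_id: int = 0

    def start(self, service: str) -> str:
        # Pretend to launch a container and record its ID.
        self.next_id += 1
        container_id = f"{service}-{self.next_id}"
        self.running.setdefault(service, []).append(container_id)
        return container_id

    def stop(self, service: str) -> str:
        # Pretend to stop the most recently started container of a service.
        return self.running[service].pop()


def reconcile(cluster: Cluster, desired: dict[str, int]) -> list[str]:
    """One pass of a reconciliation loop: move the actual state towards the desired one."""
    actions: list[str] = []
    for service, wanted in desired.items():
        current = len(cluster.running.get(service, []))
        for _ in range(wanted - current):  # too few instances: start more
            actions.append(f"start {cluster.start(service)}")
        for _ in range(current - wanted):  # too many instances: stop some
            actions.append(f"stop {cluster.stop(service)}")
    # Services that are no longer declared at all are removed entirely.
    for service in list(cluster.running):
        if service not in desired:
            while cluster.running[service]:
                actions.append(f"stop {cluster.stop(service)}")
            del cluster.running[service]
    return actions


cluster = Cluster()
print(reconcile(cluster, {"web": 3, "api": 1}))  # initial rollout
print(reconcile(cluster, {"web": 3, "api": 1}))  # already converged, prints []
print(reconcile(cluster, {"web": 1}))            # scale web down, remove api
```

Running the same desired state twice produces no actions the second time. This idempotence is what lets a controller run the loop continuously and simply repair whatever has drifted.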

As the container environment scales and the number of Kubernetes clusters increases, you may need another tool, such as Rancher, which is designed to manage multiple Kubernetes clusters at scale in distributed environments. Among Google Cloud services, Rancher’s counterpart is Anthos: a comprehensive platform for managing application deployments (both in containers and on virtual machines) in hybrid and multi-cloud environments.

Take advantage of containers in the cloud

Contact us if you are wondering whether moving applications to containers is a good strategy for your organisation. FOTC’s certified specialists will help you assess the potential of the investment, select a solution and plan a strategy based on a detailed analysis. We’ll support you through the migration process and the subsequent operation of your cloud services.