Data is the new gold – at least for all companies and organisations with views to expand. A business that is aware of the power hidden inside data carries out process analysis and introduces data-driven changes and improvements. Some go even further – with the technology at their disposal they predict trends, probable market changes and the potential consequences of business decisions they have yet to make.

As your business grows, so do the swathes of data you collect – gigabytes turn into terabytes and petabytes. To keep costs and the time it takes to generate a report at a reasonable level, you need the right tools. BigQuery can be a huge help with both. As the name indicates, it is a service dedicated to analysing Big Data, the fuel for so many analytics tools, including the popular Google Analytics.

What is Google’s BigQuery?

It’s a serverless, scalable cloud data warehouse service. It allows you to service millions of queries and perform advanced analytics on petabytes of data in SQL, without worrying about costly maintenance of advanced infrastructure, scaling up or down and balancing traffic.

BigQuery is one of the services available on Google Cloud. Your datasets are stored and processed on a stable, secure and scalable Google Cloud (here you can find out what is Google Cloud and how it can support your business). Using this service you can create your own analytics tool – for example a data warehouse – which can track progress and changes within and outside the company. You can take advantage of the built-in Machine Learning models within BigQuery, which offer a range of predictive and analytics tools for different business scenarios.

Serverless technology

BigQuery is a serverless service, which means that maintenance is fully automated. You don’t need to worry about maintaining the physical data centre, updating software, setting scaling rules, balancing traffic and complex security principles. Google Cloud, the service provider, makes sure they work seamlessly, while you can focus on running analytics without any distractions. According to an ESG study, the total cost of ownership (TCO) of a serverless BigQuery service is 26-34% lower over the course of three years compared to other available analytics tools.

Fast analysis

While databases are best suited for a “small query / fast response,” data warehouses are far more efficient when doing complex analysis on vast loads of information. BigQuery needs seconds to process terabytes of data, and about 3 minutes for a petabyte. If you have a load of historic and current data, analysing it with BigQuery will take up to several minutes. Other data analysis system would need even a few hours.

No source code changes

In order to use BigQuery, you don’t need to make major changes or alter the source code. That’s because BigQuery supports the ANSI SQL:2011 standard and offers free programming interfaces ODBC and JDBC.

Automated backup

The tool creates an automated backup and keeps the history of changes for 7 days. You can easily compare your results with the previous version or restore data.

Analysing data from different sources

You can analyse data from a variety of sources using BigQuery –from Google Marketing Platform, Google Analytics, YouTube and hundreds of external SaaS applications. You can import data manually or build a pipeline to automatically source data and clean it before sending it to BigQuery.

Multi-cloud analytics with BigQuery Omni

BigQuery Omni enables analysing data from other public clouds, without leaving the BigQuery interface. Its architecture is easily scalable and can handle significant workload. It uses a query engine called Dremel to access AWS or Azure clouds so there is no need to transfer data between the clouds.

Built-in Machine Learning (ML) models

One of the services available is BigQuery ML which is dedicated to creating and developing machine learning models by using standard SQL queries. BigQuery ML speeds up the development of ML products by reducing the coding and data transfer to a minimum. You can also integrate the service with Vertex AI and TensorFlow to create and train your own ML models.

Business Intelligence (BI) support

BigQuery BI Engine is an in-memory analysis function which analyses even large datasets almost in real time. BI Engine is integrated with data visualisation tool Data Studio which presents results in form of clear dashboards. The service also supports ad hoc reports which encompass a sliver of the database. To generate these reports you don’t need to involve your analytics or IT trams.

Find out more:

- Cloud SQL – a cloud database. What is it and why is it worth using?

- What is Google Cloud Storage, and how to use it?

BigQuery business use cases

Here are some examples how companies use BigQuery capabilities:

Optimising logistics

UPS collects data on hundreds of thousands of parcels: their weight, size, and location. By using machine learning and data analysis, the company can pinpoint the most efficient way of loading delivery vans and containers. The system also allows UPS to prepare for periods of increased traffic, like Christmas.

Cinematic success

20th Century Fox uses Google Cloud, including BigQuery and machine learning. It creates preference profiles for their clients, and analyses movie trailers to determine how popular with audiences a new production will be.

Reading preferences

Safari Books Online is an internet library serving millions of users, comprising over 30,000 books and films. Safari Books Online uses BigQuery to match titles to the preferences to individual readers.

Stock price forecasting

Dow Jones (DJIA) is one of the largest stock exchange indices, which includes giants such as Apple, Amazon and Boeing. By using BigQuery and ML, Dow has created Data, News & Analytics Platform (DNA), which enables companies to run scenario simulations and predict how events (including floods, hurricanes and other natural disasters) will impact the index.

Personalised playlists

Spotify uses BigQuery to offer each of its 400 million users personalised playlists that can change every few hours depending on the music the user is listening to.

BigQuery costs – how much does cloud data analysis cost?

The standard payment model for cloud services is pay-as-you-use, which means you pay only for the resources you actually employ. BigQuery pricing consists of two major elements: data analysis and data storage costs.

Data analysis costs

You can choose between two payment models for data analysis:

- on-demand – a flexible model on the number of bytes processed. The first terabyte of data is analysed for free, the following cost $5 per 1 TB. This is a good model for you if you plan to analyse data sporadically;

- flat-rate – this is a model where you acquire slots and reserve specific vCPU resources that are later used for data analysis. You can reserve a minimum of 60 seconds (flex plan), for a 30 days (monthly plan) or for 365 days (annual plan). The further ahead you plan, the lower the price. This is a solution for companies that want to use the service frequently or continuously. The flat-rate depends on the time frame and the number of slots. You can find more information about this payment plan in Google Cloud pricing documentation.

Data storage cost

Data storage is also done in two tiers:

- active storage – for tables and partitions that have been modified over the past 90 days. The first 10 GB per month is free, the regular price is $0.020 per GB;

- long-term storage – for tables and partitions that gave not been modified in the last 90 days. This type of storage is 50% cheaper than active storage: $0.010 per GB, while the first 10 GB a month is stored for free. The storage class changes automatically to long-term after 90 days without data modifications.

Additional costs can encompass ingesting and exporting data, sending data between locations or access through Storage Read API. In order to receive an exact estimate, use cost calculator or consult Google Cloud pricing documentation.

BigQuery tutorials

Your first steps in the service

To start using BigQuery, you need a Google Cloud account (here you can read about the top 20 advantages of Google Cloud). You will also need to know SQL.

Go to your Google Cloud console.

Choose the project you want to work on. The list of projects is available from the drop down menu next to the Google Cloud header.

Once you’ve selected the project, turn on Cloudshell in the top right corner.

You will see this view:

Reading the database

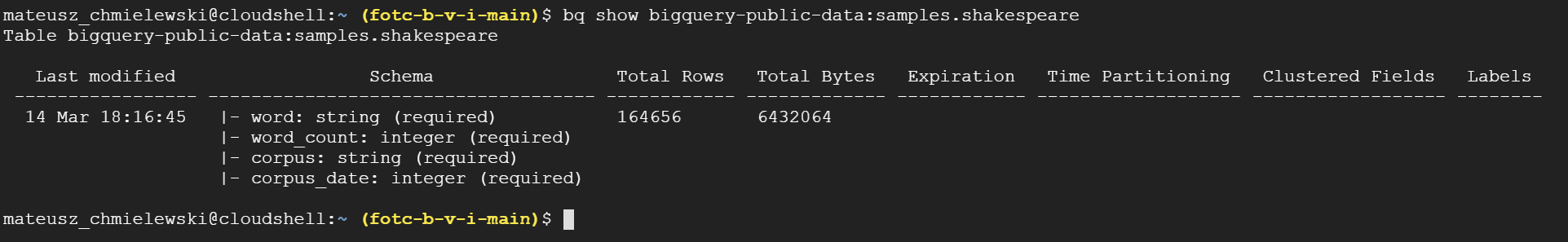

To get an overview of the database (data structure, number of rows, size) use this command:

bq show bigquery-public-data:samples.shakespeare

And this will be the result of your query:

In bq commands we will be using a flag –use_leagcy_sql – it sets standard SQL as the query language.

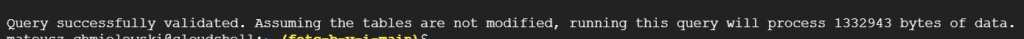

To validate and verify a query, all you need to do is add a dry_run flag. This is what a sample query with this flag looks like:

bq query --use_legacy_sql=false --dry_run \ 'SELECT word FROM `bigquery-public-data`.samples.shakespeare LIMIT 5'

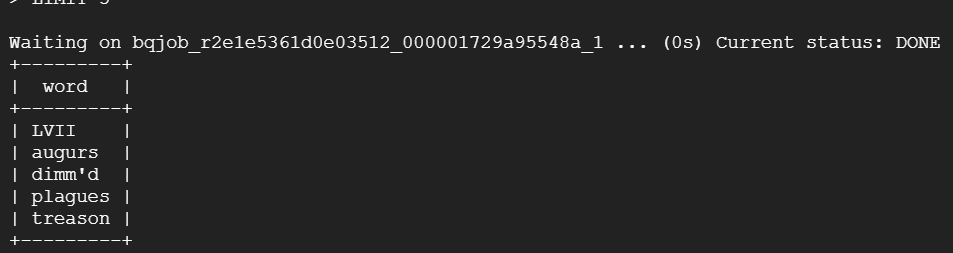

If the query is correct, you will see its result by removing the flag –dry_run

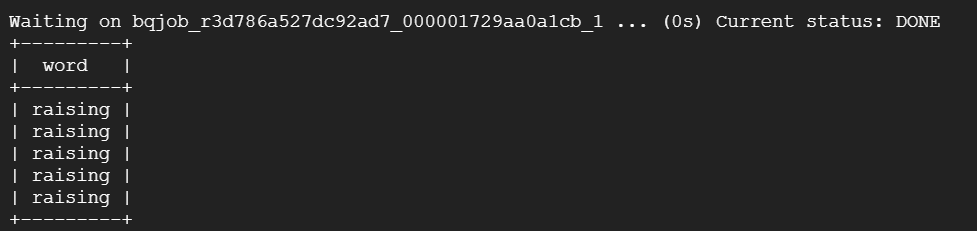

bq query --use_legacy_sql=false \ 'SELECT word FROM `bigquery-public-data`.samples.shakespeare LIMIT 5'

This is the result of the sample query:

Data selection

To separate specific data from the records, you can use the where clause that will select data for a specific value, rule or regular expression. If, for instance, you want to select only records that contain the word raising in the word column, all you need to do is use the following query:

bq query --use_legacy_sql=false --dry_run \ 'SELECT word FROM `bigquery-public-data`.samples.shakespeare WHERE word = raising LIMIT 5'

First you need to validate the query.

Then run the following command without –dry_run:

bq query --use_legacy_sql=false \ 'SELECT word FROM `bigquery-public-data`.samples.shakespeare WHERE word = raising LIMIT 5'

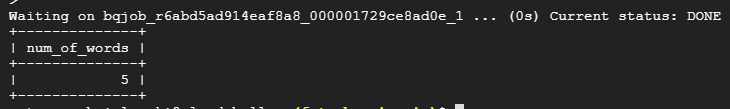

Counting records

If you want to check how many records with this word the database contains, use the aggregating function COUNT(record you want to calculate). The query should look like this:

bq query --use_legacy_sql=false \ 'SELECT COUNT(word) as num_of_words FROM `bigquery-public-data`.samples.shakespeare WHERE word = raising’

Every table can be renamed using the keyword as. In this case we haved a descriptive name aggregated in the column. The result of the query is as follows:

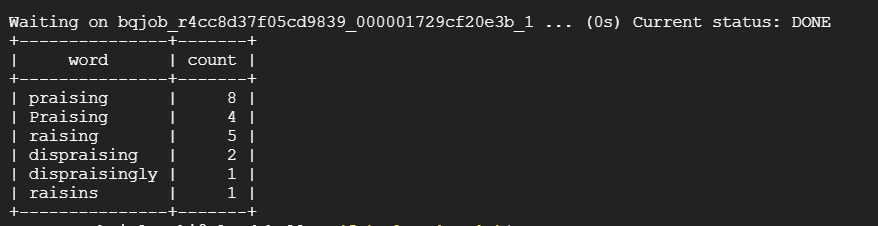

Let’s assume you want to find all records containing the word raising. In this case use the keyword LIKE, which will allow you to search by a given key.

If you place the % character before the word you are searching for, the system will include prefixed results to, e.g. dispraising. If you add the percentage symbol on both sides %raising%, you will get results including both prefixes and suffixes, e.g. dispraisingly.

When you have more than one column with an aggregating function, you need to indicate with GROUP BY which column you want to group the columns by.

bq query --use_legacy_sql=false \ 'SELECT word, COUNT(word_count) AS count FROM `bigquery-public-data`.samples.shakespeare WHERE word LIKE raising GROUP BY word'

This is the result of the query:

Test BigQuery for free

It is best to test BigQuery’s capabilities in practice. If you want to wet your toes and see how Google Cloud solutions (including BigQuery analytics) can benefit your company, contact a certified Google Cloud Premier Partner.

FOTC experts will help you discover BigQuery potential as well as provide support with your data analysis in the cloud.